Adaptive LiDAR Odometry and Mapping for Precision Agriculture

Full Description

Background

Acquiring detailed and accurate maps of tree crops is important for precision agriculture. Because farm environments change constantly, such maps need to be updated regularly, a process that mobile robots can automate. However, detailed reconstruction with ground robots faces two key challenges: providing real-time odometry and obtaining accurate maps of tree crops.

Technology

Prof. Karydis and his team at UC Riverside have developed a complete LiDAR-only odometry and mapping (LOAM) framework for real-time, robust operation of a ground robot in complex agricultural environments. The framework introduces a novel adaptive mapping technique that incorporates the stability of the robot's motion and the consistency of LiDAR observations into mapping decisions. Its key component is the Adaptive Mapper, which dynamically decides when to update the map with a new keyframe and what to include in that update.
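The listing does not describe how the Adaptive Mapper makes its decisions. Purely as a hypothetical illustration of the general idea of gating map updates on motion stability and observation consistency, the Python sketch below uses invented inputs (`ScanState`, an angular-rate stability proxy, a map inlier ratio, per-point registration residuals) and arbitrary thresholds. None of these names, signals, or values come from the inventors' framework.

```python
import math
from dataclasses import dataclass


@dataclass
class ScanState:
    """Hypothetical per-scan summary; field names are illustrative only."""
    translation: float   # metres travelled since the last keyframe
    rotation: float      # radians rotated since the last keyframe
    angular_rate: float  # rad/s, an assumed proxy for motion stability
    inlier_ratio: float  # fraction of scan points consistent with the map (0..1)


def should_add_keyframe(s: ScanState,
                        dist_thresh: float = 1.0,
                        rot_thresh: float = math.radians(10),
                        max_angular_rate: float = 0.5,
                        min_inlier_ratio: float = 0.6) -> bool:
    """Decide WHEN to add a keyframe (assumed criteria, not the patented method)."""
    # Motion stability: skip scans captured while the robot is jolting,
    # since they are more likely to be distorted.
    if s.angular_rate > max_angular_rate:
        return False
    # Observation consistency: skip scans that agree poorly with the map.
    if s.inlier_ratio < min_inlier_ratio:
        return False
    # Novelty: add a keyframe once the robot has moved or turned enough.
    return s.translation >= dist_thresh or s.rotation >= rot_thresh


def select_points_for_map(points, residuals, max_residual=0.1):
    """Decide WHAT to add: keep points whose registration residual (m) is small."""
    return [p for p, r in zip(points, residuals) if r <= max_residual]
```

In this sketch, stability and consistency act as vetoes, while distance and rotation since the last keyframe decide whether a new keyframe is warranted; a real system would derive these signals from its own odometry and scan-registration stages.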

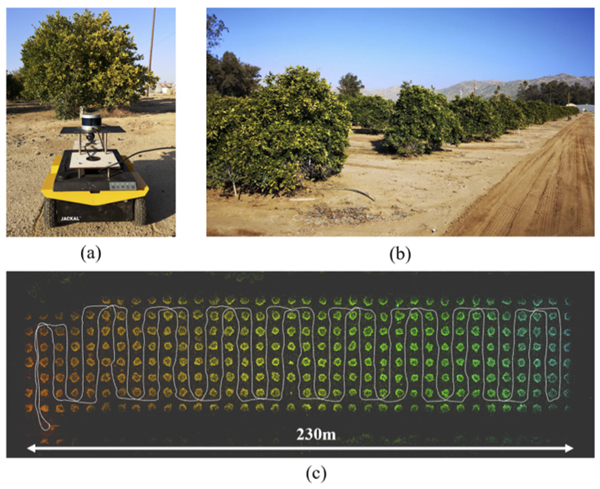

(a) The Jackal mobile robot used in this work, shown at its departure (initial) position in the field. (b) A sample view of the agricultural fields at the University of California, Riverside, where the experiments were conducted. (c) Sample mapping result of the proposed Adaptive LOAM algorithm, with the estimated trajectory superimposed.

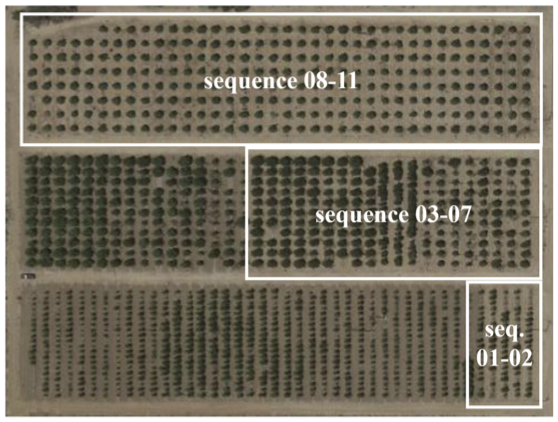

Composite satellite image of the agricultural fields where the dataset was collected (courtesy of Google Maps), with the area corresponding to each sequence highlighted.

Advantages

Compared to state-of-the-art methods, this new framework:

- Is the only one to achieve centimeter-level accuracy in mapping and localization.

- Is the only one that allows the robot to accurately return to its starting point.

- Maintains an accurate map at all times, which leads to highly accurate odometry estimation.

- Offers comparable computational efficiency.

- Uses only a single LiDAR sensor instead of relying on a combination of sensors.

Suggested uses

Accurate mapping and odometry for robots used in precision agriculture

Testing

The team has implemented the framework and demonstrated its accuracy and robustness by conducting experiments in real-world agricultural fields at UCR's Agricultural Experimental Station.

Patent Status

Patent Pending

Contact

- Venkata S. Krishnamurty

- venkata.krishnamurty@ucr.edu

Other Information

Keywords

precision agriculture, odometry, agricultural field mapping, LiDAR, mobile robot, tree crops, agtech